WHY COMPANIES NEED TO MASTER THE ART OF NOT INNOVATING. IN OTHER WORDS, THE ART OF EXNOVATION – THE OPPOSITE OF INNOVATION

Good morning, world. The mother of all antithesis theorems is here. Well, umm, it has actually been around for a few years now. It was in 1996 that I conjured up this term, exnovation, which I defined as the opposite of innovation. I presumed that I had arrived on the global management scene; after all, hadn't I finally built a better mousetrap? Fifteen years later, I see that the term exnovation is still known to almost zero individuals on this planet (’cept me, of course). Where it is known, it has taken on definitions that I never intended, and of course, nobody has beaten a path to my door yet. That's when I decided to give it one more try: to define the term appropriately, so that organisations realise they must incorporate exnovation as a critical process within their structures.

I accept that, in the present times, nothing excites corporate junkies more than the concept of innovation. Who in heaven’s name would care about exnovation, for God’s sake?! Would you wish your company to come out tops on the lists of the World’s Most Innovative Companies, or would you wish it to be numero uno on the exnovation chart – in other words, the world’s topmost ‘non-innovating’ company? One doesn’t need to think too deeply to get the answer to that. Frankly, the term exnovation was perhaps doomed by its very definition.

And reasonably so. Iconic CEOs have grown famous because they were innovative. How many CEOs do you know of who are worshipped because they exnovated? The answer might surprise you: quite a few. To understand this dichotomy, you first have to understand the correct definition of exnovation.

Exnovation does not mean propagating a philosophy of not innovating within the organisation. In reality, exnovation means this: once a process has been tested, modulated and finally mastered to super-efficiency within the innovative circles of an organisation, there should be a critical system to handle its replication. When the process is rolled out across the organisation’s various offices, this system ensures that it is not changed but is implemented in exactly the manner in which it was made super-efficient. In other words, no smart aleck within the organisation should be allowed to tamper with an already super-efficient process. The responsibility for innovation should be the mandate of specialised innovation units or teams within the organisation, and should ‘not’ be extended to each and every individual. The logic is that not every individual is competent at innovating. Yet everybody wishes to innovate, and that is what can create a doomsday scenario within any organisation.

Think of two call centers that credit card customers call when they wish to complain about lost cards. In one call center, all employees are trained by exnovation managers to follow tried and tested responses and processes. In the other, each employee is given the independence to decide innovatively how to respond to the caller’s lost card issue. Any guesses on which call center would deliver better productivity and customer satisfaction? Clearly, the one practising exnovation. And that, my dear CEOs, is the responsibility of the exnovation units within an organisation. These units are staffed with managers and supervisors whose sole job is to ensure that best-practice processes and structures are followed to a T, and are not tampered with by individuals or teams without a formal mandate. Call them what you will, but any manager responsible for the replication and mirror implementation of an efficient process is an exnovation manager.

And it’s a fact that CEOs and companies have thrived by practising this management philosophy of exnovation. This company earns a topline of $421.85 billion a year. The last time it allowed each and every employee to innovate was long before its stock began trading publicly (on October 1, 1970). To this day, its “Save money. Live better.” concept is based on standard processes, followed to the hilt and marginally improved over the years, to deliver maximum productivity and efficiency. What gives this company’s operations their push? Four things, none of them new:

- Leveraging tested economies of scale (a concept economists have discussed for decades).
- Sourcing materials from low-price suppliers (simply put, common sense).
- Using a well-tested, satellite-based IT system for logistics (a technology invented in the late 1950s). Today, the company’s vehicles make about 120,000 daily trips to and from its 135 distribution centers, spread across 38 states in the US alone. That count equals the average number of vehicles that use New York City’s Lincoln Tunnel per day.
- Smarter financial and inventory management called ‘float’ (the firm pays suppliers in 90 days, but plans its stocks so that they are sold within 7 days).

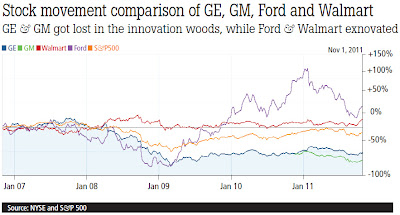

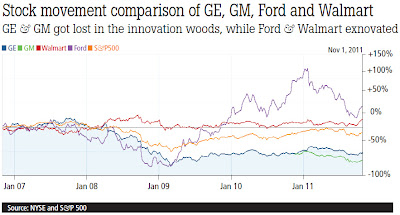

The company is #1 on the Fortune list: Walmart (2011; it has occupied the pole position in the Fortune 500 rankings for the eighth time in ten years!). For that matter, try to recall the last time you heard of an innovation from Walmart.

“After I came in as CEO, I looked at the world post-9/11 and realised that over the next 10 or 20 years, there just was not going to be much tailwind. It would be a more global market, it would be more driven by innovation. We have to change the company to become more innovation driven – in order to deal with this environment. It’s the right thing for investors.” Wise words from a wise CEO, spoken in the American summer of 2006, it seems. This protagonist was appointed CEO of a large conglomerate on September 7, 2001 [which he refers to as “the company”]. When he took over the mantle, the company had been led by his “strictly process-oriented” predecessor and had grown into a $415 billion giant (by m-cap). So how has his “innovation-driven change” focus worked out for the investors and shareholders [to whom he wanted to do right]? Ten years have gone by, and under him, the company has lost 58% of its value! And while America Inc. has become more profitable over the past decade, this company’s bottomline has actually shrunk by 14.91%. The first thing this innovation-loving CEO did when he took control was to increase the company’s R&D budget by a billion dollars. He also spent another $100 million renovating its New York innovation centre. Well, loving innovation is not wrong. What is wrong is forgetting that the best innovated products, processes and structures should not be tampered with!

In other words, Jeffrey Immelt forgot exnovation, which his predecessor Jack Welch had mastered. Yes, I’m talking about GE. Immelt later confessed in an HBR article titled “Growth as a Process”: “I knew if I could define a process and set the right metrics, this company could go 100 miles an hour in the right direction. It took time though, to understand growth as a process. If I had worked that wheel-shaped ‘execute-for-growth-process’ diagram in 2001, I would have started with it. But in reality, you get these things by wallowing in them a while. Jack was a great teacher in this regard. I would see him wallow in something like Six Sigma.” But this is not to say that Jack Welch was against innovation. In fact, he loved it; but he ensured that not everybody in the organisation was allowed to innovate. Immelt’s article does state that “under Jack Welch, GE’s managers applied their imaginations relentlessly to the task of making work more efficient. Welch created a formidable toolkit and mindset to maintain bottomline discipline.”

Whatever best practices were innovated in GE’s group companies, Welch ensured that they were exnovated too. They were shared with the other group companies at GE’s Crotonville Training Centre and GE’s Management Academy. Subsequently, they were implemented throughout the group with a combination of common sense and managerial judgement. From Six Sigma to the 20-70-10 rule, Welch was all about making GE’s traditional strength, process orientation, a religion for its employees. It’s easy to name someone Welch fired during his tenure at GE. How could it not be, with a list of over 112,000 employees to choose from? [They were fired because they did not fit into GE’s process-oriented culture. Ron Ashkenas is Managing Partner of Schaffer Consulting and a co-author of The GE Work-Out. In a June 2011 HBR article titled ‘You Can’t Dictate Culture – but You Can Influence It’, he writes: “The real turning point for GE’s transformation came when Jack Welch publicly announced to his senior managers that he had fired two business leaders for not demonstrating the new behaviours of the company – despite having achieved exceptional financial results.”]

Next, tell us one innovation that Welch introduced. Difficult? In all probability, your answer will end up describing a process he introduced at GE. He then ensured that everyone, from his senior managers to the junior-most, followed it to the hilt. During Welch’s tenure, GE’s shareholder wealth grew by a massive 2,864.29%. That made it the world’s most valuable company, with an m-cap of $415 billion, well ahead of the then second-most valuable company, Microsoft, at $335 billion. Honestly, it wasn’t just innovation that created that wealth; it was exnovation too, perhaps even more so.

Talk about a petrochemical company that is the third-largest company in the world and one of the highest profit-makers ever (with a bottomline of $30.46 billion in FY2010). In the name of innovation, the last time you saw this company contribute was in the 1940s. That was when it developed the naphtha steam cracking technology, which it still uses to produce petrochemicals. Since then, there have only been modifications and improvements to this technology. Even when others had started talking about biofuels and innovation, this company’s CEO was adamant about continuing to invest in that technology. It is what made the $363.69 billion company (m-cap as of November 1, 2011) what it is in the modern world. “I am not an expert on biofuels. I am not an expert on farming. I don’t have a lot of technology to add to moonshine. What are we going to bring to this area to create value for our shareholders that’s differentiating? Because to just go in and invest like everybody else – well, why would a shareholder want to own Exxon Mobil?” said Rex Tillerson, Chairman & CEO of Exxon Mobil, the second-largest Fortune 500 company. Fortune Senior Writer Geoff Colvin wrote an article titled ‘Exxon = oil, g*dammit!’, and this is what he said about Tillerson’s attitude to innovating in the fuels of the future: “The other supermajors are all proclaiming their greenness and investing in biofuels, wind power and solar power. Exxon isn’t. At Exxon it’s all petroleum. Why isn’t the company investing in less polluting energy sources like biofuels, wind, and solar? Remembering that Exxon is above all in the profit business, we know where to look for the answer. As a place to earn knockout returns on capital, alternative energy looks wobbly. It’s a similar story for alternative fuels for power generation. Exxon just doesn’t know much about building dams or burning agricultural waste. Its expertise is in oil and gas.” Translation: Exxon keeps working on its set processes and ignores what Tillerson calls moonshine [read: innovative fuels].

As for how efficient and bottomline-focused Exxon’s system has become, Colvin has a few lines to add: “At this supremely important job, it is a world champion. All the major oil companies bear about the same capital cost, just over 6%. But Exxon earns a return that trounces its competitors. Others could be pumping oil from the same platform, and Exxon would make more money on it. It is like taking the same train to work, but they get to the office first.” Can the way the most valuable company on Earth functions hold a lesson for exnovation managers? Of course.

Next, the auto majors. Since Henry Ford introduced real innovation to the industry in the form of the assembly line, the Ford Motor Company hasn’t had much to boast about in this regard. And yet, it became the only Detroit major to bounce back without a federal bailout. And what about the real innovator? It appears that being an innovator does not pay well in the auto industry either! General Motors was ranked the #1 innovator (among 184 companies) by The Patent Board in its automotive and transportation industry scorecard for 2011. But all this came at the cost of the company’s bottomline, which bled $76.15 billion in the seven years leading to 2010. [And this is not counting the fateful year 2009, when GM got a fresh lease of life from the US government. Washington pumped in a huge $52 billion that ultimately saved America’s innovation pride.] And what about investors? If GM holds the patents and is the king of innovation, should it not have been the best bet for investors? Count the numbers and decide. Had an investor put $100 into GM stock exactly 10 years back, he would have just $78.42 left in his trading account, a return of negative 21.58%! Had the same sum been invested in four other big automakers, the reading would have been quite different. The investor would have gained 22.72% in Ford, 39.52% in Toyota, 89.4% in Hyundai Motors and 364.32% in Volkswagen! These companies focus on design and on maintaining procedures that help create cars to set standards of quality. They do not focus on innovating or on leading the rush for patents in clean-energy fuels! A message for GM: stop investing billions of taxpayers’ funds in developing green-fuel and propulsion technologies. Instead, put people on a production process that will help launch more variants of the small diesel car (the Chevrolet Beat) for the BRIC markets. That should suffice. Exnovate, as Toyota does with its production system, which follows the 5S, Kaizen and Jidoka philosophies. Create a process of continuous improvement in small increments that make the system more efficient, effective and adaptable.

Scott Berkun worked on the Internet Explorer development team at Microsoft from 1994 to 1999. In his May 2007 bestseller ‘The Myths of Innovation’, he uses lessons from the history of innovation to break apart, chapter by chapter, the powerful myths about innovation that are popular in the world of business. “Competence trumps innovation. If you suck at what you do, being innovative will not help you. Business is driven by providing value to customers and often that can be done without innovation: make a good and needed thing, sell it at a good price, and advertise with confidence. If you can do those three things consistently you’ll beat most of your competitors, since they are hard to do: many industries have market leaders that fail in this criteria. If you need innovations to achieve those three things, great, have at it. If not, your lack of innovation isn’t your biggest problem. Asking for examples kills hype dead. Just say ‘can you show me your latest innovation?’ Most people who use the word don’t have examples – they don’t know what they’re saying and that’s why they’re addicted to the i-word.”

The fundamental question really is this. In August 2011, airlines like Singapore Airlines, Virgin Airways, China Southern, United Airways, KLM Royal Dutch Airlines and Korean Air kept a near-100% on-time departure record for flights to and from India (as per DGCA). Could they have done so had each of their management heads, employees and pilots innovated in their transactions? No. [That would surely have had disastrous consequences!] Would hospitals renowned for heart surgery be the same safe place for patients if their doctors were to innovate on their processes and dig out new surgical styles each time? No. [Absurd!] Several Chinese steel companies feature among the world’s top ten steel producers by volume (source: World Steel Association, 2011): Hebei Iron and Steel, Baosteel Group, Wuhan Iron and Steel, Jiangsu Shagang and Shandong Iron and Steel Group. Would they be there had they innovated on their manufacturing method every single day? Impossible!

But really, I repeat ad nauseam that exnovation is not about refusing innovation within the company. Yes, a few of my examples may give off that air. But exnovation rests on the idea that only some employees are gifted enough to analyse and innovate processes. Such elite employees should therefore be placed in specialised innovation units whose sole responsibility is to examine processes and structures throughout the organisation and to improve them innovatively in whatever way possible. Employees who lack such innovative capacities may be better at simply implementing or following the processes. Exnovation managers should therefore train such employees to ‘not innovate’.

The world believes that Steve Jobs was a great innovator. I would rather say he was the world’s second-greatest exnovator, one who ensured that even his innovation teams followed a structured, time-driven process to come up with innovative solutions and products. And when they did, the results were exnovated across all of Apple’s divisions and offices. That was the wonder of Steve Jobs the visionary.

In 2003, the globally renowned management author Jim Collins wrote an iconic article for Fortune magazine titled ‘The 10 Greatest CEOs of All Time’. At #1 on this all-time list, Collins ranked an individual named Charles Coffin. In that article, Collins wrote, “Coffin oversaw two social innovations of huge significance: America’s first research laboratory and the idea of systematic management development. While Edison was essentially a genius with a thousand helpers, Coffin created a system of genius that did not depend on him. Like the founders of the United States, he created the ideology and mechanisms that made his institution one of the world’s most enduring and widely emulated.” If this is not one of the greatest combinations of innovation with exnovation, then what is? The institution Coffin co-founded with Edison was GE. Coffin passed away in 1926. To this day, he remains, for me, the world’s greatest exnovator.